Meta has officially released Llama 4, its newest and most sophisticated suite of AI models, expanding what is feasible in multimodal artificial intelligence. With this release, Meta positions itself as a key participant in the competitive AI arms race while continuing to push the limits of open-source AI innovation.

What Is Llama 4?

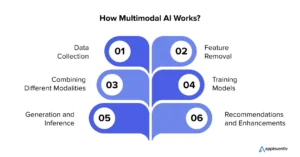

The Llama 4 family currently includes two released models, Llama 4 Scout and Llama 4 Maverick. A third, much larger model, Llama 4 Behemoth, was previewed alongside them but is still in training. The models are natively multimodal: they accept text and image inputs and generate text, and they were pretrained jointly on text, image, and video data.

Scout and Maverick can both be downloaded freely under Meta’s Llama 4 Community License, allowing developers, academics, and organizations to experiment with them, although the license carries some regional and commercial restrictions.

Multimodal Capabilities and Advanced Performance

Compared with earlier Llama versions, Llama 4 Scout and Maverick show notable improvements in reasoning, long-context handling, and data processing, and perform better on challenging multimodal tasks. Scout, for example, supports a context window of up to 10 million tokens.

Because they are built to integrate and analyze content across multiple formats, the models suit use cases such as customer support, content creation, data analysis, and interactive virtual environments.

The Coming of Llama 4 Behemoth

While Scout and Maverick are available now, Meta’s most ambitious model to date is Llama 4 Behemoth. Behemoth is being developed as a “teacher” model whose outputs are used to train smaller models such as Maverick, and Meta expects it to rank among the most capable large language models available.

Tackling Development Challenges

Launching Llama 4 was not straightforward. During development, the models reportedly lagged behind rivals such as OpenAI’s models in key areas including voice interactions, reasoning, and math.

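To address these problems, Meta adopted a mixture-of-experts (MoE) architecture, which splits parts of the model into specialized sub-networks (“experts”) and routes each input token to only a few of them. Because only the selected experts run for a given token, compute per token scales with the active parameters rather than the total parameter count. Scout, for example, has about 109 billion parameters in total but activates only 17 billion per token.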
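The selective activation described above can be illustrated with a toy top-k router. This is a minimal sketch, not Meta’s implementation: the function names (`moe_layer`, `make_expert`), the tiny dimensions, the random linear “experts”, and the `top_k` value are all hypothetical, and Llama 4’s real routing (including any shared experts) may differ. A router scores every expert, only the `top_k` highest-scoring experts are evaluated, and their outputs are mixed using softmax weights renormalized over the chosen experts.

```python
import math
import random


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    # Hypothetical toy expert: a random dim x dim linear map.
    return [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]


def apply_expert(weights, x):
    # Matrix-vector product: run one expert on one token vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def moe_layer(x, router, experts, top_k=1):
    # Router logits: one score per expert (dot product with a router row).
    logits = [sum(r * xi for r, xi in zip(row, x)) for row in router]
    # Keep only the indices of the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Gate weights are renormalized over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for g, i in zip(gates, chosen):
        y = apply_expert(experts[i], x)  # unchosen experts are never evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, chosen


# Toy usage: 8 experts, but each token runs through only 2 of them.
rng = random.Random(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
router = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [make_expert(DIM, rng) for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0, 1) for _ in range(DIM)]
output, used = moe_layer(token, router, experts, top_k=TOP_K)
print(f"experts used: {used} ({TOP_K} of {NUM_EXPERTS})")
```

Here the per-token cost is two expert evaluations instead of eight, which is the same trade-off, at toy scale, that lets an MoE model hold many parameters while activating only a fraction of them per token.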

Meta’s $65 Billion AI Infrastructure Push

Llama 4 is part of a larger investment strategy rather than a standalone model release. In keeping with its goal of dominating the AI market, Meta plans to spend up to $65 billion on AI infrastructure in 2025, including expanding its data centers, acquiring large numbers of GPUs, and supporting open research communities.

Licensing and Regional Restrictions

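Although the Llama 4 models are freely available, their license carries certain limitations. Companies whose products have more than 700 million monthly active users must request a separate license from Meta. In addition, citing changing AI legislation and data protection laws, the license does not grant rights to use the multimodal models to individuals or companies based in the European Union, although end users of products built on the models are not covered by this restriction.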

Conclusion

Meta’s release of Llama 4 marks an important milestone in open-source AI development, especially in multimodal learning. Beyond demonstrating Meta’s technical capabilities, these models are beginning to power next-generation applications and point toward a time when AI systems are more widely available, more capable, and built into everyday digital interactions. As Llama 4 continues to shape AI innovation, developers, researchers, and tech enthusiasts should keep an eye on it.
